Ben Bernanke has a good post on the Taylor Rule. Let’s start at the end, where he argues in favor of decision-making by the FOMC, rather than a rigid mechanical policy rule:

Monetary policy should be systematic, not automatic. The simplicity of the Taylor rule disguises the complexity of the underlying judgments that FOMC members must continually make if they are to make good policy decisions. Here are just a few examples (not an exhaustive list):

The Taylor rule assumes that policymakers know, and can agree on, the size of the output gap. In fact, as current debates about the amount of slack in the labor market attest, measuring the output gap is very difficult and FOMC members typically have different judgments. It would be neither feasible nor desirable to try to force the FOMC to agree on the size of the output gap at a point in time.

The Taylor rule also assumes that the equilibrium federal funds rate (the rate when inflation is at target and the output gap is zero) is fixed, at 2 percent in real terms (or about 4 percent in nominal terms). In principle, if that equilibrium rate were to change, then Taylor rule projections would have to be adjusted. As noted in footnote 2, both FOMC participants and the markets apparently see the equilibrium funds rate as lower than standard Taylor rules assume. But again, there is plenty of disagreement, and forcing the FOMC to agree on one value would risk closing off important debates.

The Taylor rule provides no guidance about what to do when the predicted rate is negative, as has been the case for almost the entire period since the crisis.

There is no agreement on what the Taylor rule weights on inflation and the output gap should be, except with respect to their signs. The optimal weights would respond not only to changes in preferences of policymakers, but also to changes in the structure of the economy and the channels of monetary policy transmission.

I don’t think we’ll be replacing the FOMC with robots anytime soon. I certainly hope not.

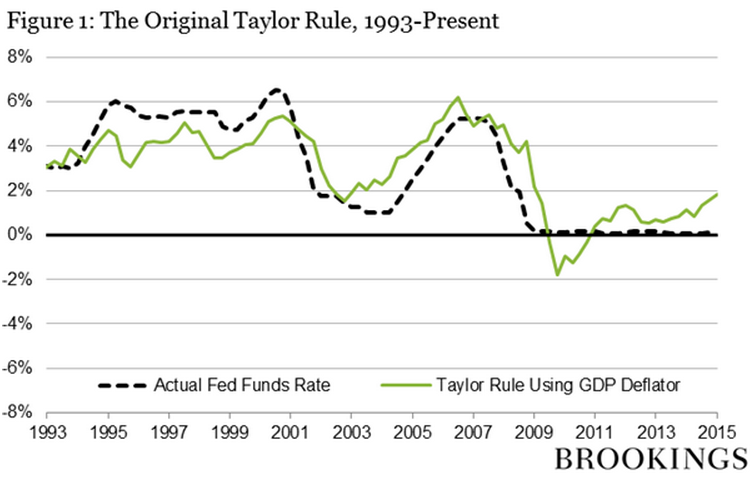

I believe that Bernanke is right about the Taylor Rule, but I don’t think he follows the implications of his arguments to their logical conclusion. If 12 FOMC members are better than a robot, then 12,000 are better than 12. But first let’s look at the graph Bernanke provides to illustrate the original Taylor Rule:

Then Bernanke provides a graph showing a modified Taylor Rule, which he argues is superior to the original:

You can see why Bernanke prefers the modified version. The original shows money too loose after 2011, which is completely implausible to anyone whose last name is not “Trichet.” Even with the fed funds rate at zero, we’ve fairly consistently underperformed the Fed’s inflation and employment targets. Money was too tight, not too easy. The modified version gets this right.
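For readers who want to see the arithmetic behind the two lines, here is a minimal sketch. It assumes the standard textbook form of the rule (equilibrium real rate, plus inflation, plus weighted inflation and output gaps), with the 2 percent equilibrium real rate mentioned in the quote above. The 2 percent inflation target, the function name `taylor_rate`, the heavier output-gap weight of 1.0 standing in for the modified version, and the input numbers are all assumptions made for illustration, not figures taken from Bernanke's graphs.

```python
def taylor_rate(inflation, output_gap, *, r_star=2.0, pi_target=2.0,
                w_inflation=0.5, w_gap=0.5):
    """Prescribed nominal fed funds rate, in percent.

    Assumed form: r* + inflation + w_inflation * (inflation - target)
                  + w_gap * output_gap
    """
    return (r_star + inflation
            + w_inflation * (inflation - pi_target)
            + w_gap * output_gap)


# Sanity check: inflation on target and a zero output gap give the
# roughly 4 percent nominal equilibrium rate described in the quote.
equilibrium = taylor_rate(2.0, 0.0)

# Hypothetical slack-economy inputs (NOT actual data):
# inflation of 1.5 percent and an output gap of -6 percent.
inflation, gap = 1.5, -6.0

original = taylor_rate(inflation, gap)             # weights 0.5 and 0.5
modified = taylor_rate(inflation, gap, w_gap=1.0)  # assumed heavier gap weight

print(f"equilibrium: {equilibrium:.2f}%")
print(f"original rule: {original:.2f}%")
print(f"modified rule: {modified:.2f}%")
```

With these made-up inputs the original weights prescribe a rate slightly above zero, while the heavier output-gap weight prescribes a negative rate. That is the sense in which one version can read a zero fed funds rate as too loose and the other can read it as too tight.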

But even the modified version does poorly in 2008. It calls for a fed funds target much higher than the actual rate. You might think this 12-month period was no big deal; no rule is perfect. In fact, this was the most important period for Fed policy in my lifetime, when actual monetary policy was far too tight, indeed disastrously so. And the modified Taylor Rule called for policy to be much tighter still! (The original version is even worse.)

Fortunately Ben Bernanke and the FOMC noticed what was going on, and cut rates much faster than even the modified Taylor Rule would have called for. We avoided another 1929-33. Bernanke’s right, the committee is better than the robot. We need the human touch.

But 12,000 humans are much better than 12. Two days after Lehman failed, the FOMC met and refused to cut rates from 2%, seeing a roughly equal risk of recession and inflation. The markets were already seeing the oncoming disaster, and indeed the 5-year TIPS spread was only 1.23% on the day of the meeting. The markets aren’t always right, but when events are moving very rapidly they will tend to outperform a committee of 12. In fairness, this “recognition lag” was not the biggest problem; there were two far bigger failures. The first was a failure to “do whatever it takes” to “target the forecast.” That is, the Fed should move aggressively enough that its own internal forecast remains at the policy goal. The second was not engaging in “level targeting”, which would have helped stabilize asset prices in late 2008, and made the crisis less severe.

Bernanke once said there is nothing magical about 2% inflation. Nor is there anything magical about 12 members on the FOMC. The wisdom of crowds literature suggests you want a large number of voters, with monetary incentives to “vote” wisely. So there are actually three approaches. The Friedman/Taylor “robot” approach. The Bernanke “wise bureaucrats” approach. And the market monetarist “wisdom of the crowds” approach.

PS. I proposed using prediction markets to guide monetary policy in 1989 and 1995. Kevin Dowd made a similar proposal in 1994. In 1997 a paper by Michael Woodford and a co-author suggested that our proposals were susceptible to a circularity problem, and hence the central bank could not rely solely on market forecasts. That criticism was valid for my 1995 proposal, but not the 1989 paper, nor Dowd’s paper, both of which involved the markets predicting the instrument setting that would result in on-target NGDP growth (or inflation in Dowd’s case).

Who was Woodford’s coauthor? A Princeton colleague named Ben Bernanke.

READER COMMENTS

Kenneth Duda

Apr 30 2015 at 4:50pm

I cannot press “+1” enough times. This is exactly right. We do not want a robot setting monetary policy, but nor do we want an opaque committee of 12 anointed ones. The best alternative is outsourcing to a prediction market. Harnessing human ingenuity, that’s what it’s all about.

-Ken

Felipe

Apr 30 2015 at 6:22pm

The original shows money too tight after 2011, which is completely implausible to anyone whose last name is not “Trichet.”

This would be so funny if it weren’t so sad…

Kevin Erdmann

Apr 30 2015 at 6:48pm

I think you meant “too loose” in the sentence Felipe excerpted above.

Michael Byrnes

Apr 30 2015 at 8:31pm

The original shows money too tight after 2011, which is completely implausible to anyone whose last name is not “Trichet.”

Or “Taylor”?

Scott Sumner

Apr 30 2015 at 11:35pm

Thanks Kevin, I’m going too fast. I changed it.

Jose Romeu Robazzi

May 3 2015 at 5:33pm

I am pleased to see somebody of Bernanke’s stature come forward to talk about the inherent dispersion in estimates of the output gap. But the output gap is “The” most important input in an inflation targeting regime. Without getting it right, all is lost. He meant his post to be a criticism of rules-based monetary policy, but what he actually wrote is a post against inflation targeting. Market monetarists should press on it.
